The Question in Question

Question #21 on our most recent Honors Chemistry test has stolen a good deal of my mind space during this past week. If I had to guess which question on the test would provide fuel for a blog post, I certainly would not have picked this one. In fact, I would have bet that a question on a chemistry test would never prompt a post in a blog about physics lab work. But question #21 is certainly a lab question, one that drives to the heart of the concepts associated with reliability of measurement. Here is question #21 from the test:

Three different measuring tools were used to measure the volume of the same sample of water. The resulting measurements are shown:

Tool A: 120 mL

Tool B: 123 mL

Tool C: 123.4 mL

What conclusion can one make about the three measuring tools?

a. The three tools have varying degrees of precision.

b. Tool A is not as accurate as tool B and tool C.

c. Tool C is the most accurate tool of the three.

d. None of the tools are accurate.

Before you read on in search of my answer, give the question some thought and make a commitment to either an answer or to the verdict that the question is a terrible question. As you think through the question, give attention to your thought process. What are your internal conceptions of accuracy and precision? What images do these terms conjure up in your mind?

Teacher Conceptions of Accuracy and Precision

What captivated me about this question was that it seems that so few of us agree about what the answer is or if there is any answer at all. My first recognition that there was a lack of agreement about the terms accuracy and precision was when I checked my test key against a colleague's. We disagreed about the answer to question #21. Being intrigued (and concerned), I asked two of my respected colleagues what their answer would be. Once more, there was disagreement. The count was 2:2 and my intrigue over the question and the conceptions that we hold regarding accuracy and precision grew. And so I began an informal survey of several colleagues (approximately 13 others) in our science department. I presented them with question #21 and asked them how they would answer it. I found that my science teaching colleagues fell into three categories.

The first category is Category C - those who answered C with very little reservation. As they thought out loud about the question, they commented that the answer is definitely not A. Their conception of precision was that an instrument was precise if the measurements that it took were reproducible. Since there was only one measurement made with each tool, there is no way to evaluate the precision of the three instruments. Multiple measurements with the same tool would be required in order to evaluate the precision of the tool. For Category C teachers, precision had to do with reproducibility and without multiple measurements from the same instrument, there is no way to judge an instrument's precision. Yet because Tool C was able to make measurements with a higher number of significant digits, it was the tool that could make the most accurate measurement.

The second category is Category N - those who responded by saying there is no answer to the question. A couple of teachers within this category responded loudly with comments such as "That's a terrible question!" and "Where did you get this question?" Like Category C teachers, Category N teachers were unable to make a decision regarding the precision of the tools. Once more, their conception of precision had to do with reproducibility, and since only one measurement was made with each tool, the precision of the tools could not be compared. But Category N teachers had an additional problem with evaluating the accuracy of the tools since "the real volume" or "the true reading" or "the actual amount" was not stated in the question. For Category N teachers, their conception of accuracy had to do with proximity of a measurement to the true, real, or actual value. When I probed a bit about what was meant by "true," "real," or "actual," I eventually was able to elicit the phrase "accepted value" from these teachers. Because the question lacked the information required to evaluate either the precision or the accuracy of the tools, these teachers regarded it as a bad question that had no answer.

The third category is Category A - those who answered that A was the correct response. Like Category N teachers, Category A teachers believed that there was a need for knowledge of the accepted volume of the sample in order to judge the accuracy of the tool. As such, these teachers ruled out choices B, C and D as possible answers. But these teachers quickly gravitated towards answer A. Their conception of precision had nothing to do with reproducibility. Their conception of precision had to do with the exactness of the tool. The number of divisions present on the tool was the critical feature that marked a tool as being precise. The more divisions that were present on the tool, the more precise the measurement. For Category A teachers, a tool was precise if it was able to make a measurement to a greater number of significant digits.

Three of the Category A teachers had difficulty comparing the precision of tool A and tool B. For these teachers, it seemed that both tool A and tool B could make measurements of the volume to three significant digits. They admitted that their understanding of significant digits was stale and they were unsure of the rule regarding the significance of trailing zeroes in a measurement. When the topic came up and the rule was clarified (trailing zeroes are only considered significant if a decimal point is present in the number), these teachers decisively chose A as the answer. All three teachers were physics teachers, and significant digits are given significantly less attention (if any at all) by physics teachers in our department.
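For readers whose memory of the rule is equally stale, here is a minimal Python sketch of the trailing-zero rule as stated above. The function name `count_sig_figs` is a hypothetical name chosen for illustration, and the sketch assumes each reading is written as a plain decimal string (no scientific notation).

```python
def count_sig_figs(reading: str) -> int:
    """Count the significant digits in a reading written as a decimal string.

    Assumes plain decimal notation such as "120", "120." or "123.4".
    """
    text = reading.strip().lstrip("+-")
    has_decimal_point = "." in text
    digits = text.replace(".", "")
    # Leading zeroes are never significant.
    digits = digits.lstrip("0")
    # Trailing zeroes are significant only if a decimal point is written.
    if not has_decimal_point:
        digits = digits.rstrip("0")
    return len(digits)


# The three readings from question #21.
for tool, reading in {"A": "120", "B": "123", "C": "123.4"}.items():
    print(f"Tool {tool}: {reading} mL has {count_sig_figs(reading)} significant digits")
```

Under this rule, the 120 mL reading from tool A carries two significant digits, the 123 mL reading from tool B carries three, and the 123.4 mL reading from tool C carries four. Had tool A's reading been written as "120." with a decimal point, it would carry three.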

The Dart Board Analogy

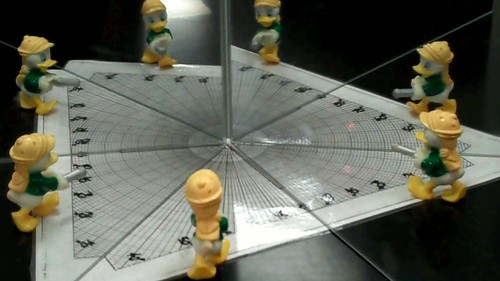

The most interesting aspect of my dialogue with the 16 science teachers was the frequency with which they referenced a particular analogy as they approached the question. In our post-survey discussions, every one of these teachers discussed the dart board or bull's-eye analogy. The dart board or bull's-eye analogy is commonly found in chemistry textbooks when discussing the distinction between accuracy and precision. It seems to be the common modus operandi of both textbook authors and classroom teachers in explaining how accuracy and precision are different.

Of the seven chemistry teachers that I surveyed, all but one explicitly referenced the dart board analogy. And each one that did was either a Category C or a Category N teacher. Ingrained in their minds was the conception of precision as being equivalent to reproducibility. Only one chemistry teacher was a Category A teacher; when reading the question, this teacher did not conjure up a picture of a dart board, but rather conjured up images of volumetric measuring tools with varying numbers of divisions.

Six of the eight physics teachers that I surveyed were Category A teachers. These six physics teachers did not equate precision with reproducibility. In our post-survey discussion, each of these Category A teachers mentioned the dart board analogy but did not feel that the analogy was significant to the question. One of the physics teachers was a Category C teacher; he quickly referenced the dart board analogy as he reasoned through to his answer. In fact, he commented that the thing he remembers most about chemistry class was the dart board analogy. The other non-Category A physics teacher explicitly defined accuracy (proximity to a target value) and precision (reproducibility) in a manner consistent with the dart board analogy. This teacher was a Category N teacher.
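The two definitions this last teacher gave (accuracy as proximity to a target value, precision as reproducibility) can be put into a short Python sketch. Everything here is an assumption made for illustration: the function names are hypothetical, the repeated readings and the accepted volume are invented (question #21 supplies neither), and the sample standard deviation is just one common way to quantify reproducibility.

```python
from statistics import mean, stdev


def accuracy_error(readings, accepted_value):
    """Distance between the average reading and the accepted value."""
    return abs(mean(readings) - accepted_value)


def reproducibility_spread(readings):
    """Sample standard deviation of repeated readings.

    Returns None when there are fewer than two readings, since a spread
    cannot be computed from a single measurement.
    """
    if len(readings) < 2:
        return None
    return stdev(readings)


# HYPOTHETICAL data, invented for illustration only.
# Question #21 gives neither repeated readings nor an accepted volume.
repeated_readings = [122.0, 123.0, 124.0]  # mL, same tool, three trials
accepted_volume = 125.0                    # mL

print(accuracy_error(repeated_readings, accepted_volume))
print(reproducibility_spread(repeated_readings))
# A single reading, as in question #21, gives no spread to evaluate.
print(reproducibility_spread([123.4]))
```

Under these definitions, both functions need information that the question does not give: the first needs an accepted value, and the second needs more than one reading from the same tool. That is the objection raised by the Category N teachers.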

Is the Dart Board Analogy On Target?

My intrigue over this question was intensified by the realization of the power of an analogy on teachers' thought processes. The analogy dominated the thinking of nearly every chemistry teacher as they approached this question. As one colleague put it, "Every chemistry textbook defines precision as reproducibility using the dart board analogy."

So what is this dart board analogy? And why is it so popular? Perhaps the most concise and representative presentation of the dart board or bull's-eye analogy can be found at http://celebrating200years.noaa.gov/magazine/tct/tct_side1.html. If you are unfamiliar with the analogy, take some time to review it. As you read through the analogy, ask yourself if you agree with it. Is the dart board analogy on target when it comes to the presentation of the concepts of accuracy and precision? Then come back next week to the Lab Blab and Other Gab blog as I take aim at the dart board analogy.

This article is contributed by Tom Henderson. Tom currently teaches Honors ChemPhys (Physics portion) and Honors Chemistry at Glenbrook South High School in Glenview, IL, where he has taught since 1989. Tom invites readers to return next week as he continues to gab about accuracy and precision. Tom plans to take aim at the dart board analogy and hopes to provide some accurate and precise discussion about topics of measurement.